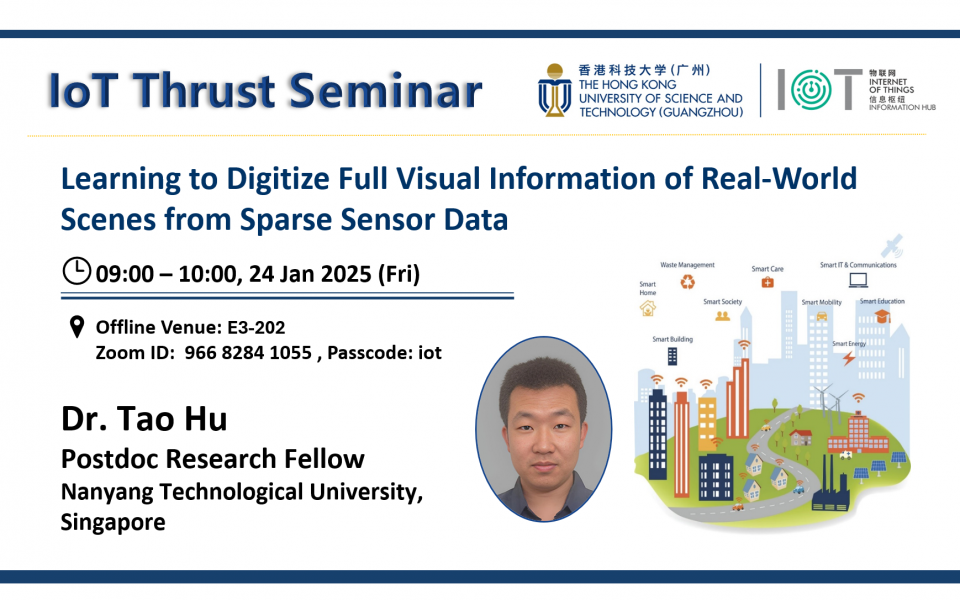

IoT Thrust Seminar | Learning to Digitize Full Visual Information of Real-World Scenes from Sparse Sensor Data

Supporting the following United Nations Sustainable Development Goals:

Full visual information encompasses everything in a real-world scene that is perceptible to human vision, including geometry, motion, and appearance. Digitizing this information (e.g., through 3D reconstruction and generation) is widely used in applications such as smart homes, smart cities, Industry 4.0, autonomous driving, robot navigation, augmented reality (AR) and virtual reality (VR), and the entertainment industry.

However, digitizing real-world scenes from sparse sensor data (e.g., partial point clouds scanned by LiDAR or single-view RGB images captured by a camera) is challenging because of occlusions and the irregularity and complexity of 3D scenes, which also lead to a scarcity of real-world 3D data. In this talk, I will discuss my research on digitizing full visual information for both static and dynamic scenes by leveraging data-driven and physics-driven techniques.

First, I will present my work on reconstructing the 3D geometry and appearance of diverse real-world static scenes from a single-view RGB camera or depth sensor alone. At the core of this research is inferring full 3D information from partial observations by leveraging priors learned from a large synthetic 3D dataset. Our world, however, is rarely static, and reconstructing and understanding dynamic humans in particular is of major interest. I will discuss my research on scaling up 3D human creation from 2D data (e.g., images and videos captured by smartphone cameras) without relying on real-world 3D human data. I will also share my contributions to the perception, representation, reconstruction, generation, and multimodal editing of 3D humans, which aim to automate the creation of 3D digital humans from sparse-view cameras.

Finally, I will conclude by outlining my future teaching and research plans.

Dr. Tao Hu is a postdoctoral research fellow with S-Lab and MMLab@NTU at Nanyang Technological University, Singapore, working with Prof. Ziwei Liu. He obtained his Ph.D. in computer science from the University of Maryland, College Park (USA), advised by Prof. Matthias Zwicker (Chair of the CS Department). During his Ph.D., he visited the Max Planck Institute for Informatics in Saarbrücken, Germany, under the guidance of Prof. Christian Theobalt, and the 3DV group at Tsinghua University under the guidance of Prof. Yebin Liu. He also conducted research at Microsoft Research Asia, Shanghai AI Lab, and ByteDance AI Lab during his internships.

His research focuses on human-centric visual computing (HCVC), including 3D scene perception, reconstruction, and generation, with a particular emphasis on 3D human understanding, motion capture, and reconstruction. He is currently extending HCVC to interdisciplinary applications such as smart life (HCVC + IoT), smart industry (HCVC + robotics and manufacturing), and the entertainment industry (AI-generated content).