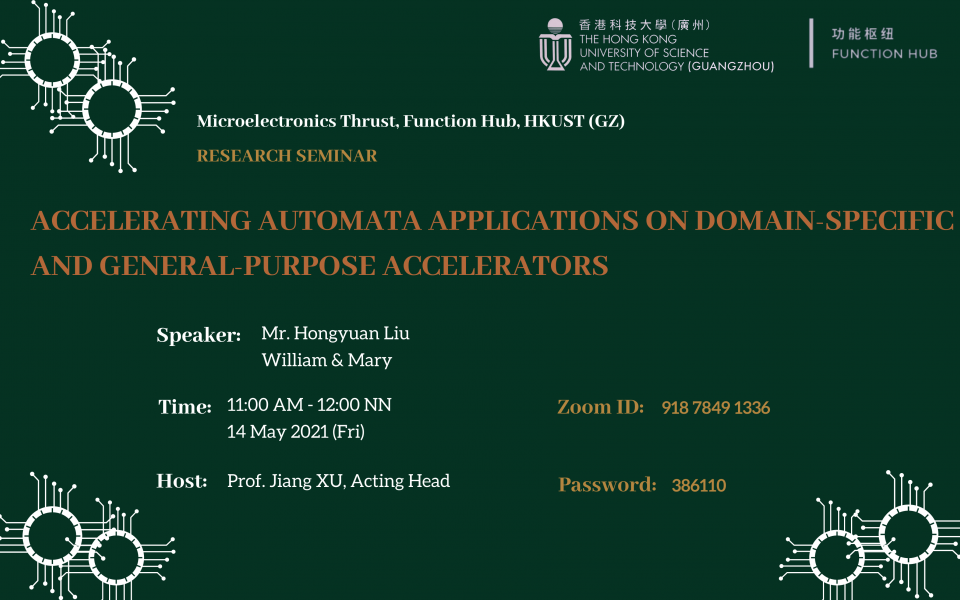

Public Research Seminar by the Microelectronics Thrust: Accelerating Automata Applications on Domain-Specific and General-Purpose Accelerators

We focus on the automaton, a fundamental computation model used widely in applications from domains such as network intrusion detection, machine learning, and parsing. Large-scale automata processing is challenging for traditional von Neumann architectures, and many accelerators have been proposed to address it; Micron's Automata Processor (AP) is one example. However, because AP is a spatial architecture, it cannot handle automata programs larger than its on-chip capacity without repeated reconfiguration and re-execution, which severely limits its performance.
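To make the computation model concrete, here is a minimal Python sketch of a homogeneous NFA of the kind AP executes. It assumes AP-style semantics: the symbol test sits on each state, start states are enabled on every input symbol, and a matched state enables its successors for the next symbol. The names `State`, `run`, and `ab_or_ac` are hypothetical and not part of any AP toolchain. A spatial architecture such as AP evaluates all enabled states in parallel each cycle; this sequential loop only models the semantics.

```python
from dataclasses import dataclass, field


@dataclass
class State:
    symbols: frozenset  # input symbols this state matches (the test lives on the state)
    successors: list = field(default_factory=list)  # states enabled when this one matches
    start: bool = False  # assumed "all-input" start state: enabled on every symbol
    report: bool = False  # reporting (accepting) state


def run(states, data):
    """Return (offset, state index) for every report while consuming data."""
    reports = []
    enabled = set()
    for offset, ch in enumerate(data):
        # Start states are re-enabled on each symbol so a match can begin anywhere.
        enabled |= {i for i, s in enumerate(states) if s.start}
        # On AP, every enabled state performs this comparison in the same cycle.
        matched = {i for i in enabled if ch in states[i].symbols}
        reports += [(offset, i) for i in sorted(matched) if states[i].report]
        # Matched states enable their successors for the next symbol.
        enabled = {j for i in matched for j in states[i].successors}
    return reports


# Hypothetical example automaton recognizing "ab" or "ac" anywhere in the input.
ab_or_ac = [
    State(frozenset("a"), successors=[1, 2], start=True),
    State(frozenset("b"), report=True),
    State(frozenset("c"), report=True),
]
print(run(ab_or_ac, "xabac"))  # [(2, 1), (4, 2)]
```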

We observed that many automata states are never enabled during execution but are still configured on AP chips. Such "cold" states underutilize AP. To address this issue, we propose a lightweight offline profiling technique that predicts which states are cold and partitions them out of AP. We also develop SparseAP, which requires minimal hardware changes, to handle mispredictions efficiently. This software and hardware co-optimization obtains a 2.1x speedup over baseline AP execution across the evaluated applications.
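The profiling idea can be sketched as follows, under the simplifying assumption that a state counts as hot if a representative profiling input enables it at least once, and every other state is predicted cold. This is an illustrative Python sketch with hypothetical names (`enabled_states`, `partition`), not the SparseAP implementation; the automaton is given as plain lists so the block stands alone.

```python
def enabled_states(symbols, successors, starts, data):
    """Return the set of states enabled at least once while consuming data.

    symbols[i]: set of input symbols state i matches
    successors[i]: states that state i enables when it matches
    starts: start states, assumed to be enabled on every symbol
    """
    seen = set()
    enabled = set()
    for ch in data:
        enabled |= set(starts)
        seen |= enabled
        matched = {i for i in enabled if ch in symbols[i]}
        enabled = {j for i in matched for j in successors[i]}
    return seen | enabled  # also count states enabled by the final symbol


def partition(num_states, hot):
    """Split state indices into (configured on AP, partitioned out as cold)."""
    return sorted(hot), [i for i in range(num_states) if i not in hot]


# Hypothetical automaton for "abc" (states 0-2) or "xyz" (states 3-5).
symbols = [{"a"}, {"b"}, {"c"}, {"x"}, {"y"}, {"z"}]
successors = [[1], [2], [], [4], [5], []]
starts = [0, 3]

hot = enabled_states(symbols, successors, starts, "abcab")  # offline profiling run
on_ap, cold = partition(len(symbols), hot)
print(on_ap, cold)  # [0, 1, 2, 3] [4, 5]

# A real input can still enable a predicted-cold state (a misprediction),
# which is the case SparseAP's hardware support is meant to handle.
print(sorted(enabled_states(symbols, successors, starts, "xyz") - hot))  # [4, 5]
```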

Although AP is performant, it is not widely available. By contrast, GPUs serve as general-purpose accelerators in many computer systems but execute automata applications slowly. Can we reduce the performance gap between GPUs and AP? We identify excessive data movement in the GPU memory hierarchy and propose optimization techniques to reduce it. Although these techniques significantly alleviate memory-related bottlenecks, they have a side effect: work is assigned statically to cores. This leads to poor compute utilization because GPU cores are wasted on idle automata states. We therefore propose a novel dynamic scheme that effectively balances compute utilization with reduced memory usage. Our combined optimizations significantly improve on previous state-of-the-art GPU implementations of automata. Moreover, they enable current GPUs to outperform AP on several applications while performing within an order of magnitude of AP on the rest.
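The utilization problem with static assignment can be illustrated with a simple count. Assuming one thread per state (a simplified static mapping, not the authors' GPU kernel), a thread does useful work on a symbol only if its state is enabled. A worklist of enabled states avoids the idle threads but must be maintained in memory, which is the trade-off a dynamic scheme has to balance. The function name `active_counts` and the example automaton are hypothetical.

```python
def active_counts(symbols, successors, starts, data):
    """Number of enabled states on each input symbol."""
    counts = []
    enabled = set()
    for ch in data:
        enabled |= set(starts)  # start states assumed enabled on every symbol
        counts.append(len(enabled))
        matched = {i for i in enabled if ch in symbols[i]}
        enabled = {j for i in matched for j in successors[i]}
    return counts


# Hypothetical automaton for "abc" (states 0-2) or "xyz" (states 3-5).
symbols = [{"a"}, {"b"}, {"c"}, {"x"}, {"y"}, {"z"}]
successors = [[1], [2], [], [4], [5], []]
starts = [0, 3]

counts = active_counts(symbols, successors, starts, "abcab")
# Static mapping: every state's thread runs on every symbol, busy or not.
static_slots = len(symbols) * len(counts)
# Dynamic worklist: only enabled states are scheduled on each symbol.
dynamic_slots = sum(counts)
print(counts, f"static utilization = {dynamic_slots / static_slots:.2f}")
# [2, 3, 3, 2, 3] static utilization = 0.43
```

In automata where only a small fraction of states is enabled at a time, the gap between the static and worklist slot counts grows accordingly.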

Hongyuan Liu is a Ph.D. candidate in the Department of Computer Science at William & Mary, advised by Dr. Adwait Jog. He works in the areas of computer architecture and high-performance computing. Specifically, his current research focuses on accelerating emerging applications built on common computation models (e.g., automata and graphs) and co-designing them with domain-specific accelerators (DSAs) and general-purpose accelerators (e.g., GPUs). His research has appeared in ASPLOS, MICRO, PACT, and TACO, and received the Best Paper Award at ICPADS 2016.

Hongyuan Liu received a B.Eng. degree from Shandong University and a Master's degree from the University of Hong Kong. He was an intern at Intel in Fall 2019 and has been a visiting student at Argonne National Laboratory since January 2021. He has also worked as a software engineer at Baidu.

For enquiries, please contact Miss Annie WU (+86-20-36665041; anniewu@ust.hk).